Blog

Popular now

Lucidchart tips

9 pro tips for building process flows in Lucidchart

In Lucidchart, you can build process flows more quickly and effectively. Get started now with these tips and tricks, including creating a custom shape library, adding links to external documentation, and assigning a status to your document.

Lucidchart tips

Sharing and collaboration in Lucidchart

Lucidchart was built for collaboration from the start. Learn how you can share your documents, work with others in real time, and embed your diagrams on another website.

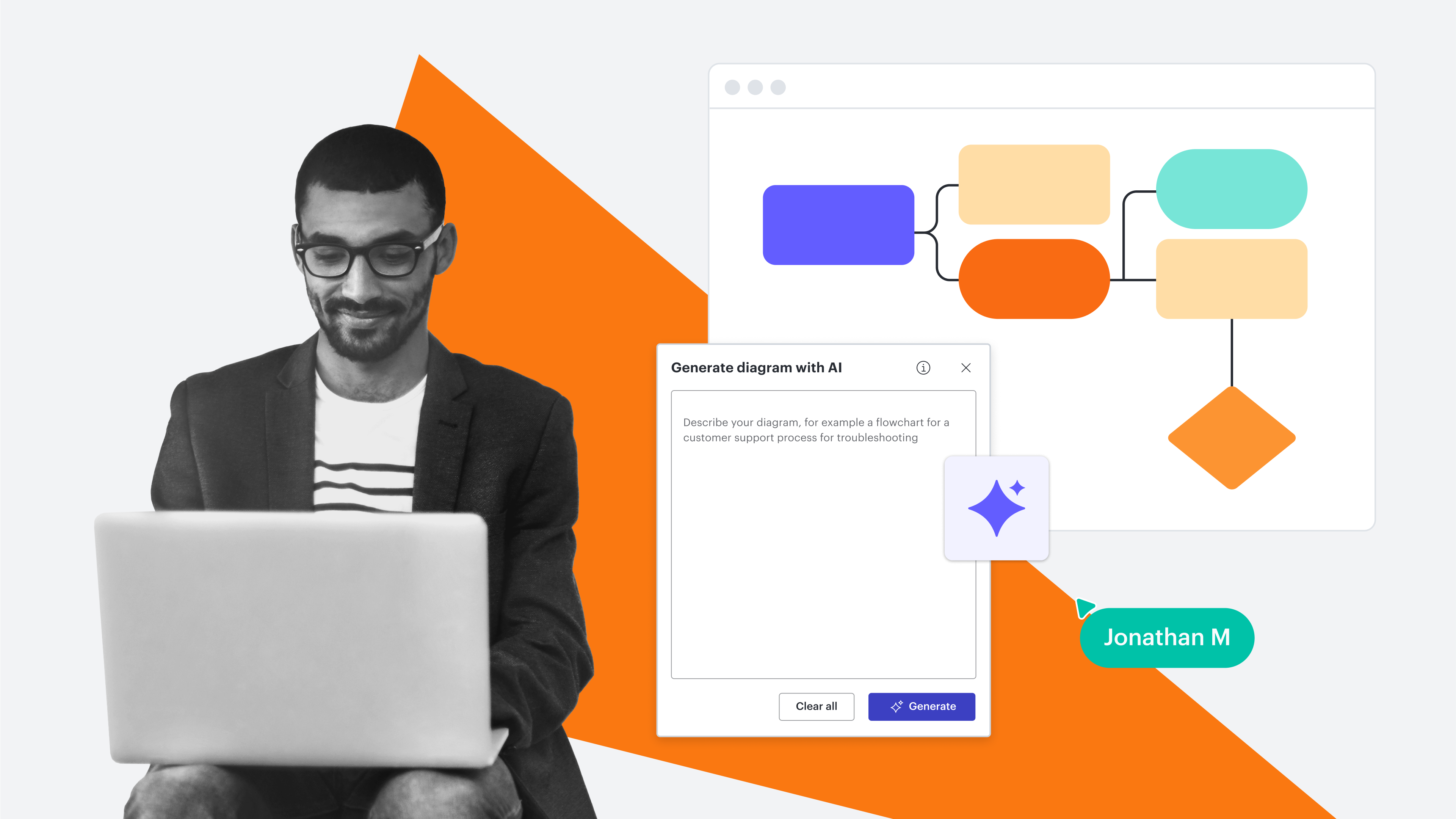

Lucidchart tips

4 tips for writing AI diagramming prompts

Learn the value of AI diagramming and get top tips on writing AI prompts to help you work more efficiently.

Diagramming

How to make wow-worthy Lucidchart diagrams

Level up your Lucidchart diagrams with these tips. Includes a free course!

Powerful clarity for dynamic professionals

Learn how to bring teams together and build the future through intelligent visualization of people, processes, and systems.

How to insert diagrams in Google Docs

Need to create a flowchart or Venn diagram in Google Docs? With our Lucidchart add-on, you can easily insert diagrams and make your documents visual.

What does HR actually do? 11 key responsibilities

Everyone knows that HR is an important department in your organization, but few employees know why. Read our in-depth description of what the HR department does (or what it should be doing) to meet the needs of employees.

How to make a fishbone diagram in Word

Learn how to make a fishbone diagram in Microsoft Word. Use this guide to make a fishbone diagram directly in Word using Shapes or with the Lucidchart add-in. Templates included!

How to make an org chart that’s more engaging and interactive

Lucid simplifies org charts, with features and tools that make them interactive, organized, and easy to maintain.

4 common IT problems that Lucidchart solves

In this article, we’ll explore four common problems IT professionals face and how to mitigate them using Lucid.

6 Salesforce templates for finance teams

In this article, we share a roundup of six Salesforce templates for the Financial Services Cloud.

What is a QBR in business?

QBRs keep customers and stakeholders engaged and invested. Learn what a QBR is and get free templates to hold your own.

10 Lucidchart templates to help engineering teams reduce project disruptions

Here are 10 customizable templates to help your engineering teams stay productive and keep projects running smoothly.

4 steps to strategic human resource planning

Learn how to implement strategic human resource planning to find and keep the best talent.

Conway’s Law explained: Why your output is only as good as your internal structures

This article will explore Conway’s Law, its pros and cons, and what it could look like for any given organization.

How to make a data flow diagram in Word

Find out how to make a data flow diagram in Microsoft Word using the shape library or with the Lucidchart add-in. Templates included!

What is test-driven development?

Test-driven development can lead to greater efficiency and happier users. Learn all about it in this article.
